How to Prevent Crippling Your Infrastructure When AWS US-EAST-1 Fails

23.10.2025

Stijn De Haes

Practical lessons on designing for resilience and how to reduce your exposure in case of a major cloud outage.

At Dataminded, we build and operate data platforms for organizations that rely heavily on the cloud. When AWS us-east-1 went down this week, our teams and clients experienced the impact firsthand. In this post, Stijn De Haes, Tech Lead for our Conveyor accelerator, shares practical lessons on designing for resilience: what worked, what didn’t, and what every engineering team can learn from this incident.

—

On 20 October 2025, AWS experienced a major outage in the N. Virginia (us-east-1) region, impacting more than 140 services across the platform. Many workloads hosted in other regions were also affected, due to hidden dependencies that still routed through us-east-1.

It was a tough day for the people behind the scenes. While headlines focused on outages and downtime, engineers, SREs, DevOps and infrastructure teams were working under immense pressure to restore services. Anyone who’s ever been on call knows that moment when dashboards turn red and alerts don’t stop. We’ve been there too, and our respect goes out to everyone who spent the day firefighting to keep things running.

At Dataminded, several of our clients felt the impact in different ways. Those using Conveyor, our managed data-engineering platform, remained partially operational, while other AWS clients not on Conveyor experienced full downtime. As the tech lead of Conveyor, I’ve seen other AWS outages over the years, and we’ve reduced our dependency on us-east-1 accordingly, so this time we were only minimally impacted.

In this post, I want to share what helped reduce our exposure, and what we’re improving after this latest incident. I also talked to Jonny Daenen for his TechEx video series, see our video interview below.

1. Use Regional IAM Endpoints

AWS Identity and Access Management (IAM) is a global service, so many applications depend on the us-east-1 region for authentication. When that region fails, authentication can fail too, even for workloads running elsewhere.

The fix is simple: use regional STS endpoints instead of the default global one. The STS endpoint is what your application calls to fetch temporary IAM credentials.

To do this, set the AWS_STS_REGIONAL_ENDPOINTS environment variable to regional. All modern AWS SDKs support this variable, and setting it explicitly ensures that your application uses the local region’s STS endpoint rather than falling back to us-east-1. New SDK major versions released after July 2022 default to regional, so you might already be using it. In my opinion, setting the value explicitly is still best practice, as it removes the need to check whether your SDK defaults to regional.

At Conveyor, a scheduler and runner for data applications, we automatically set this variable for all scheduled jobs so customers are protected by default. This reduces dependency on us-east-1 for runtime operations, though updating IAM roles during an outage may still be impossible.
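As an illustration, here is a minimal sketch of how a scheduler could inject the setting into a job’s environment before launching it. The helper names `with_regional_sts` and `regional_sts_url` are hypothetical and not Conveyor’s actual implementation; the hostname pattern assumes the standard AWS partition (China and GovCloud regions use different domains).

```python
from typing import Mapping


def regional_sts_url(region: str) -> str:
    """Regional STS endpoint URL (assumes the standard AWS partition)."""
    return f"https://sts.{region}.amazonaws.com"


def with_regional_sts(env: Mapping[str, str], region: str) -> dict:
    """Return a copy of env that tells AWS SDKs to use the regional STS endpoint."""
    patched = dict(env)
    patched["AWS_STS_REGIONAL_ENDPOINTS"] = "regional"
    # Only fill in a region when the job has none, so explicit settings win.
    patched.setdefault("AWS_DEFAULT_REGION", region)
    return patched
```

A job launcher could then pass `with_regional_sts(os.environ, "eu-west-1")` as the environment of the process it starts, for example through the `env` argument of `subprocess.run`.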

2. Mirror Public ECR Images

AWS Public ECR (Elastic Container Registry), introduced in 2020, quickly became a shared dependency, especially for EKS add-ons and public container images. Because it’s a global service, it’s hosted in us-east-1, and when that region goes down, image pulls can fail. Many AWS customers depend on it, either at runtime or build time of their applications. In my view, we should try to at least remove the runtime dependency on AWS Public ECR.

The solution is rather easy to explain, but might take you a while to implement. Just ensure you have a copy of the public ECR images in your private ECR registry, and use that copy. You can even have a copy in multiple regions.

So to reduce exposure:

Use ECR pull-through caching, or

Periodically pull and push images to your own private ECR,

Ideally keep copies across multiple regions.
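With pull-through caching, the image reference changes from the Public ECR host to your private registry plus a repository prefix. The sketch below rewrites a reference accordingly. It assumes your pull-through cache rule uses the prefix `ecr-public`; the function name, account ID and image name are hypothetical placeholders.

```python
PUBLIC_ECR_HOST = "public.ecr.aws/"


def to_pull_through_ref(image: str, account_id: str, region: str,
                        prefix: str = "ecr-public") -> str:
    """Rewrite a Public ECR image reference to its private pull-through copy.

    References that do not point at Public ECR are returned unchanged.
    The prefix must match the one configured in your pull-through cache rule.
    """
    if not image.startswith(PUBLIC_ECR_HOST):
        return image
    private_host = f"{account_id}.dkr.ecr.{region}.amazonaws.com"
    return f"{private_host}/{prefix}/{image[len(PUBLIC_ECR_HOST):]}"
```

For example, `to_pull_through_ref("public.ecr.aws/example/app:1.0", "123456789012", "eu-west-1")` yields `123456789012.dkr.ecr.eu-west-1.amazonaws.com/ecr-public/example/app:1.0`.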

You will need to ensure you are actually using the private ECR copy, and this is where most of the work lies: finding every use of Public ECR. It’s easy to miss a dependency. During this outage, we found one image that still pointed to Public ECR, a leftover from an older migration. We’re now adding automated checks to ensure no running containers depend on external registries. I highly recommend investing in a script that checks your running container images to ensure no Public ECR images are present.
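Such a check could look roughly like the sketch below, assuming your workloads run on Kubernetes. It takes the parsed JSON of a pod list and reports every container or init container whose image is pulled from Public ECR. The function name is hypothetical, and this is not the check we run at Conveyor.

```python
PUBLIC_ECR_HOST = "public.ecr.aws/"


def public_ecr_images(pod_list: dict) -> list:
    """Return (namespace, pod, image) tuples for images pulled from Public ECR.

    pod_list is the parsed JSON of a Kubernetes pod list.
    """
    findings = []
    for pod in pod_list.get("items", []):
        meta = pod.get("metadata", {})
        spec = pod.get("spec", {})
        # Init containers pull images too, so check both lists.
        containers = spec.get("containers", []) + spec.get("initContainers", [])
        for container in containers:
            image = container.get("image", "")
            if image.startswith(PUBLIC_ECR_HOST):
                findings.append((meta.get("namespace", ""), meta.get("name", ""), image))
    return findings
```

The input can come from `kubectl get pods --all-namespaces -o json`, parsed with `json.load`. Running the check on a schedule and alerting on a non-empty result would catch leftovers like the one we found.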

3. Remove External Registry Dependencies

While reviewing ECR, it’s worth checking other registries such as Docker Hub or Quay.io. Many rely on AWS infrastructure themselves, creating indirect dependencies. You probably don’t want to depend on them anyway: some rate-limit pulls, and most don’t provide an SLA on their free services.

We’ve seen this before:

A Google Cloud outage once disrupted AWS workloads because containers were pulled from the Kubernetes registry, which is hosted on GCP. This impacted people running Kubernetes across the world.

During the October 20 incident, some Azure environments were affected when dependencies on Quay.io failed (such as our network provider Cilium).

This was unexpected, and we will make sure we no longer depend on any external registries. Our plan is to host all critical images privately, with monitoring to detect any unexpected external pulls.
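Detecting external pulls starts with working out which registry an image reference points to. The sketch below follows the usual Docker convention: the first path component is a registry host only if it contains a dot or a colon, or is `localhost`; otherwise the image comes from Docker Hub. The function names and the allow-list are hypothetical.

```python
def registry_of(image: str) -> str:
    """Return the registry host an image reference is pulled from."""
    first, sep, _ = image.partition("/")
    # Without an explicit host, Docker resolves the image against Docker Hub.
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first
    return "docker.io"


def external_images(images, allowed_hosts):
    """Return the sorted, de-duplicated images not hosted on an allowed registry."""
    return sorted({img for img in images if registry_of(img) not in allowed_hosts})
```

Feeding this the image list of your running workloads, with your private registry hosts as `allowed_hosts`, gives a list of images that still need to be mirrored.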

4. Prepare for When Things Break

Even with solid architecture, incidents will happen.

Here are a few practices that help us stay calm and recover faster at Dataminded:

Maintain a Disaster Recovery Plan

Ours covers:

Notification & Activation — identify issues, alert key people, assess damage

Recovery — restore services using backups or secondary sites

Reconstruction — move back to primary infrastructure once stable

Run tabletop exercises

A tabletop exercise is a simulation of an outage. The purpose is that everyone knows what to do when alarms go off. It ensures that everyone is familiar with the procedures and helps everyone remain calm when shit hits the fan. It also helps to find gaps in the disaster recovery plan.

Discuss risk tolerance with leadership

Not every system needs the same level of redundancy. Discuss with your management what your availability needs are; that way you can make a conscious decision about which risks are tolerable and which aren’t.
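To make that conversation concrete, it can help to translate an availability target into a downtime budget. The small calculation below is only an illustration; the function name and the example targets are assumptions, not figures from our clients.

```python
def allowed_downtime_hours(availability_pct: float,
                           period_hours: float = 24 * 365) -> float:
    """Hours of downtime permitted per period for a given availability target."""
    return (1 - availability_pct / 100) * period_hours


for target in (99.0, 99.9, 99.99):
    # 99.9% over a 365-day year leaves roughly 8.76 hours of downtime.
    print(f"{target}% -> {allowed_downtime_hours(target):.2f} hours per year")
```

A system with a 99% target can tolerate a full day of regional outage within its yearly budget, while a 99.99% target leaves under an hour, which is the kind of difference that justifies multi-region redundancy.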

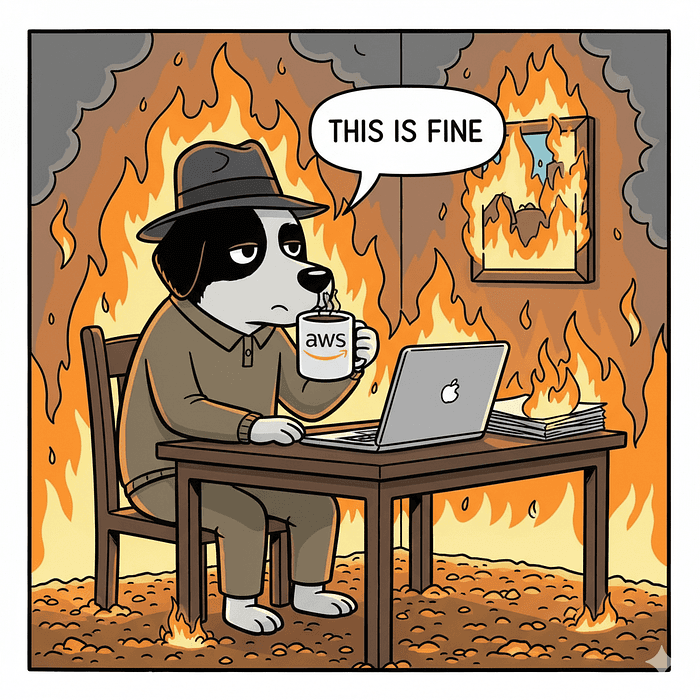

N. Virginia (US-EAST-1) is on fire, and the whole world knows

Review past incidents

Workshops based on real outages help teams anticipate weak points and build confidence. Look at the kinds of outages that have happened in the past and hold a workshop with your team to work out how they might impact you. This helps in two ways: you can reduce your exposure to similar outages, and everyone will be more confident in diagnosing issues the next time something happens.

Conclusion

It’s hard to remove all dependencies on us-east-1, but it’s entirely possible to reduce your blast radius:

✅ Use IAM regional endpoints

✅ Mirror public ECR images to private registries

✅ Eliminate runtime dependencies on external registries

✅ Test and rehearse recovery plans regularly

The AWS us-east-1 outage showed once again that resilience is both technical and human. Good engineering reduces impact, but it’s the people behind the screens who carry the weight when things fail. Here’s to everyone who worked through that day to keep systems running.
